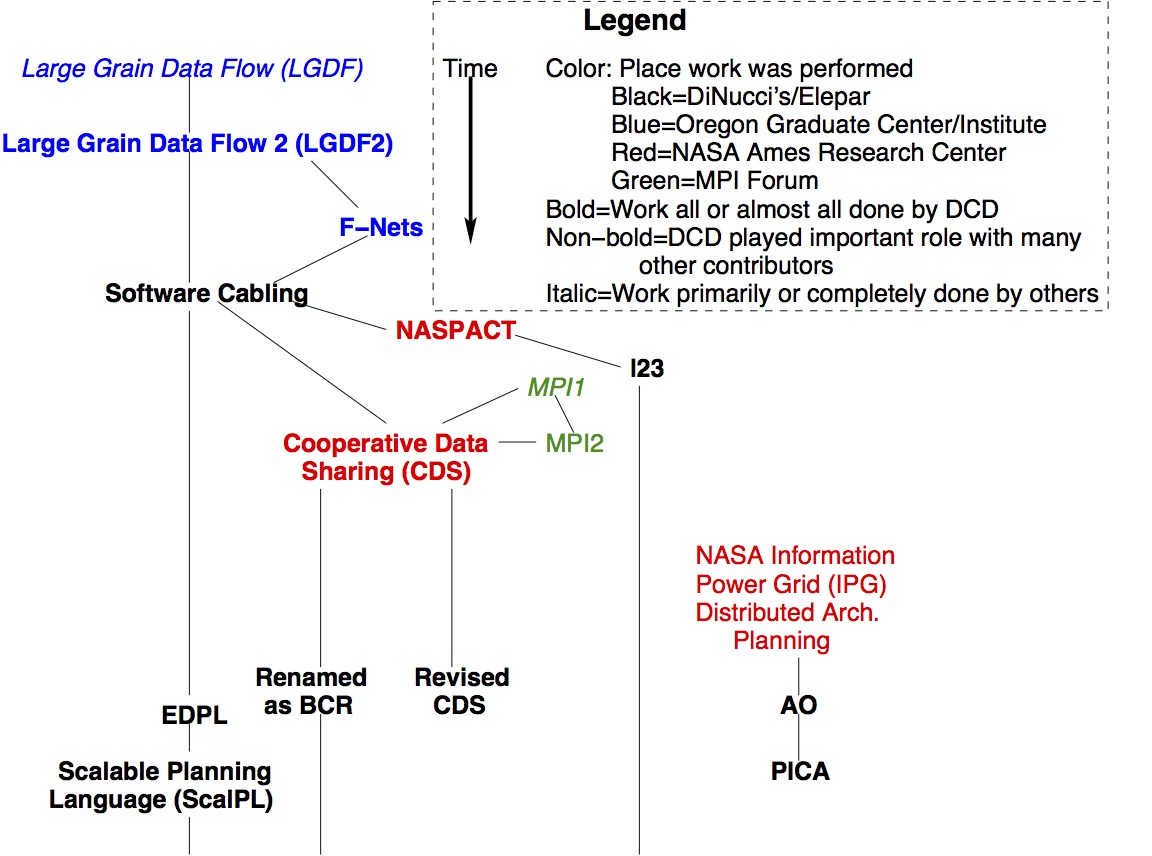

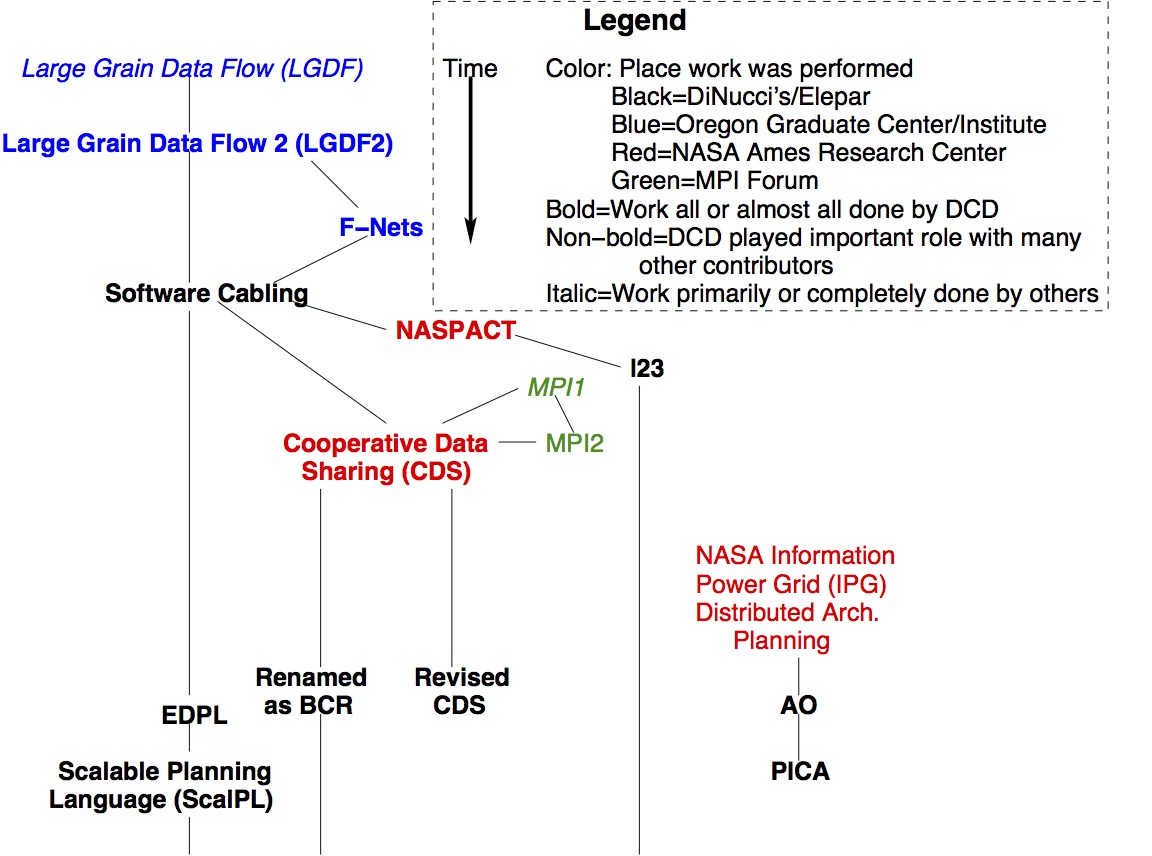

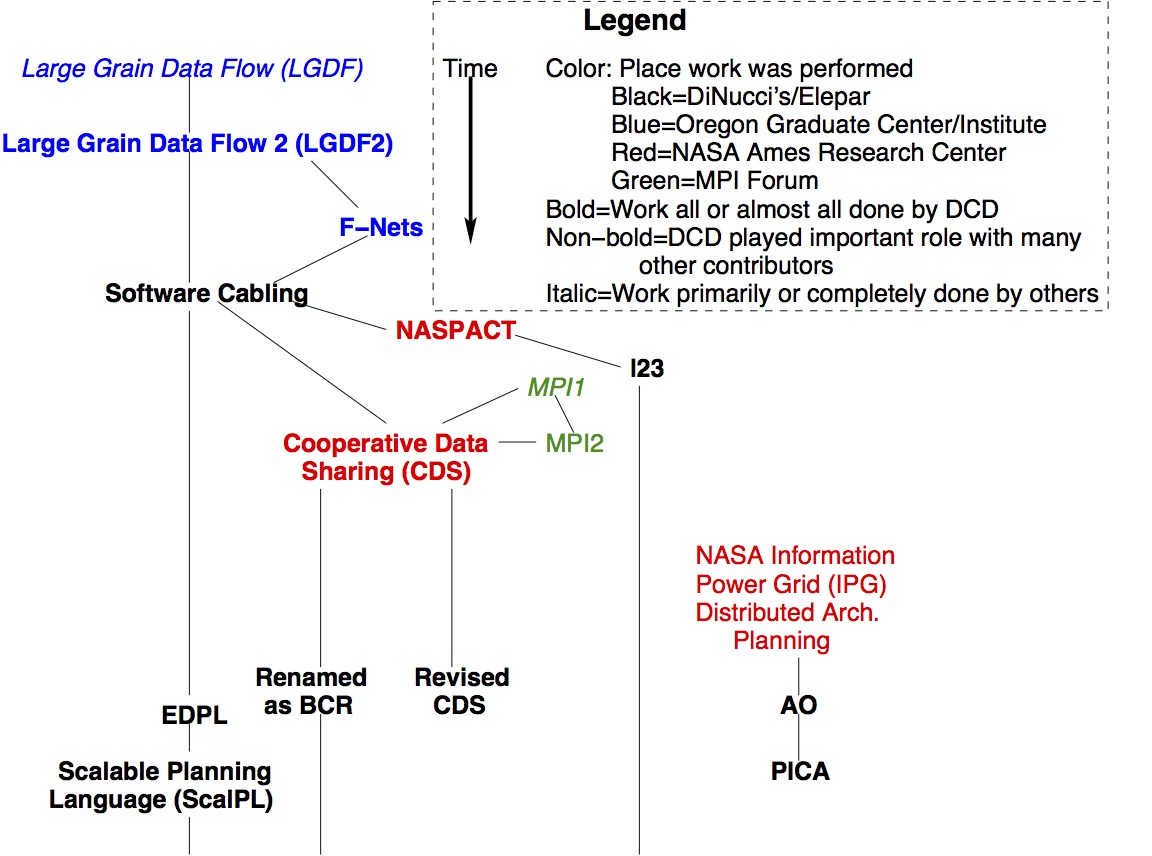

History/Lineage of Elepar's 3 Primary Technologies

ScalPL (Scalable Planning Language)

- R. G. Babb II proposed Large Grain Data Flow (LGDF), first at Boeing and then at the Oregon Graduate Center (later Institute), for engineering software for shared-memory multiprocessors.

- As Babb's grad student at OGC/OGI (Oregon Graduate Institute), David DiNucci (DCD) investigated whether LGDF could also be made portable to distributed-memory machines. The result was LGDF2, which was more portable and formal than LGDF, but also simplified and therefore lacking some practical programming features. DCD implemented F-Nets on some shared-memory platforms.

- The skeleton of LGDF2 became the formal computational model F-Nets, the basis of DCD's PhD dissertation.

- After finishing grad school and recognizing that the F-Nets model lacked features for practical programming, DCD independently developed Software Cabling (SC), to add some desired software engineering features consistent with the model.

- Upon starting at NASA (NAS Division) ~2000, DCD proposed a project based on SC, called NASPACT ("NAS Parallel Algorithm Construction Tool"). After NASA considered the proposal for one year (allowing no development, but requesting several internal white papers), NASA declined to fund a prototype.

- Shortly thereafter, DCD developed parts of the same tools independently at home, as "l23" (abbreviation for "parallel tools"). While at NASA, he also published incomplete SC language descriptions online at elepar.com ("cheat sheet" and tutorial) and in a peer-reviewed paper (at PDSE/ICSE97).

- Software Cabling continued to evolve slowly over the years. In about 2005, at Elepar, its internal nomenclature and terminology were changed and the language was renamed EDPL, and shortly afterward renamed again to ScalPL (Scalable Planning Language).

- The first complete documentation with the ScalPL name and terminology was published in the book "Scalable Planning" in 2012, though both ScalPL and l23 have continued to evolve since.

- More recently, DCD has created some overview videos and a white paper describing F-Nets and ScalPL.

CDS (Cooperative Data Sharing)

- When the original MPI forum concluded, it had left many kinds of portable communication unaddressed, including those that would be optimal for Software Cabling. DCD therefore proposed to NASA that he develop CDS, which would encompass both MPI and SC-related communication, making it more portable and efficient than MPI for a wide variety of NASA workloads. NASA approved, and CDS was born.

- DCD implemented CDS over UDP sockets on a number of networks available on NASA platforms for benchmarking.

- When the MPI2 forum began and proposed implementing one-sided communication, DCD joined that committee, partly to see which features of CDS might be integrated into the MPI2 standard. The committee felt that the liveness constraints of CDS were sufficiently different from those of MPI that CDS could not reasonably be accommodated.

- Upon leaving NASA, DCD obtained rights to the CDS software from NASA for Elepar, renamed it BCR ("Before CDS Redesign"), and published it as open source. He then separately expanded the CDS definition to include features found in MPI and MPI2 (e.g. communicators, collective communication). This new CDS definition was never fully implemented.

PICA (previously AO)

- In his last year or so at NASA ~1997, DCD led the Distributed Architectures planning team for the NASA Information Power Grid, a joint NASA/NSF project for tying together NASA's national data, computational, instrumental, and personal resources. The team consisted of leading researchers in parallel and grid technology from around the country.

- On success of that plan (i.e. funding of the project by NASA Headquarters), DCD saw the ways that his Software Cabling technology could fit into that bigger picture, and came up with ideas for how the overall architecture could work, but NASA went in other directions.

- Upon leaving NASA to start Elepar, DCD further explored this approach of allocating and paying for resources, first calling it Abstract Owner (or AO), and then PICA (People, Instruments, Computers, and Archives).

- At this time, the Peer-to-Peer Working Group (P2PWG) was taking off, and DCD got involved with that as well as the National Grid Forum (NGF). Rather than refer to the target platform of PICA as either Grids or P2P, he first referred to it as P2G (Peer-to-Grid), and then, more correctly, as DRCs (Distributed Resource Collectives).

- Although a broad outline of the basic ideas involved in PICA was published on the Elepar website, and DCD co-published a paper covering this and other approaches at the GRID 2000 conference, PICA has not been developed further.